When we hear about convolutional neural networks (CNNs), we typically think of computer vision. CNNs were behind major breakthroughs in image classification and sit at the core of many computer vision systems, from Facebook's automatic photo tagging to self-driving cars.

In recent years, CNNs have also been applied to problems in natural language processing (NLP), with some interesting results. The role of CNNs in NLP is hard to grasp, while their role in computer vision is easier to understand. So in this article we start from the computer vision perspective and slowly transition to NLP. Let's get started.

What is convolution?

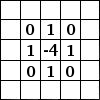

For me, the easiest way to understand a convolution is to think of it as a sliding window function applied to a matrix. That is a mouthful, but it becomes quite intuitive once you look at a visualization:

Convolution with a 3×3 filter. Source:

Use your imagination: the matrix on the left represents a black-and-white image. Each entry corresponds to one pixel, with 0 for black and 1 for white (more typically, images in computer vision are grayscale, with pixel values between 0 and 255). The sliding window is called a (convolution) kernel, a filter (translator: it acts somewhat like a filter applied point by point), or a feature detector (translator: it is used for feature extraction).

Here we use a 3×3 filter, multiply its values element-wise with the part of the original matrix it covers, and then sum the products. To get the full matrix on the right, we repeat this operation for every 3×3 submatrix of the complete matrix, sliding the filter over the whole image.
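The multiply-and-sum step described above can be sketched in a few lines of NumPy. This is only an illustration: the 5×5 image and the 3×3 filter values are made up for the example, and the helper name `convolve2d_valid` is hypothetical rather than a library function.

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1), multiplying
    element-wise and summing at every position."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]    # the current 3x3 submatrix
            out[i, j] = np.sum(patch * kernel)   # multiply, then sum
    return out

# Illustrative black-and-white image (0 = black, 1 = white).
image = np.array([
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
])

# Illustrative 3x3 filter.
kernel = np.array([
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
])

result = convolve2d_valid(image, kernel)
print(result)
# [[4. 3. 4.]
#  [2. 4. 3.]
#  [2. 3. 4.]]
```

A 5×5 input and a 3×3 filter give a 3×3 output, because there are only three valid window positions along each axis.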

You may be wondering what this kind of operation does. There are two examples below.

Blurring

Averaging each pixel with its surrounding pixels blurs the image.

(Translator: each pixel ends up with a value close to those of the few pixels around it.)
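As a rough sketch of blurring by averaging, the snippet below takes the mean of every 3×3 window of a small synthetic image. The image (black left half, white right half) is an assumption made for the example, and NumPy's `sliding_window_view` (NumPy 1.20 or later) is just one convenient way to enumerate the windows.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Synthetic image: left half black (0), right half white (1).
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# All 3x3 windows of the image, shape (3, 4, 3, 3).
windows = sliding_window_view(image, (3, 3))

# Averaging each window is the same as convolving with a 3x3 filter
# whose nine entries are all 1/9.
blurred = windows.mean(axis=(-2, -1))

print(blurred[0])  # approximately [0, 0.33, 0.67, 1]
```

The hard step from 0 to 1 in the input becomes a gradual ramp in the output, which is exactly what a blur looks like.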

Edge detection

Taking the difference between a pixel and its surrounding pixels makes the edges stand out.

To see why, think about what happens in a smooth part of an image, where a pixel has the same value as its neighbors: the differences cancel out, and the resulting value at each pixel is 0, which is black. (Translator: presumably because there is no contrast.) If there is a sharp edge in intensity, such as a transition from white to black, the difference is large and the resulting value is white.

The GIMP manual has a few more examples. (Translator: such as sharpening and edge enhancement; it appears to be software that can retouch images with convolution.) If you want to know more, I suggest Chris Olah's post on the topic.
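The same idea can be sketched for edge detection. The filter below (8 in the center, -1 everywhere else) is one common choice for "pixel minus its neighbors"; both the filter and the synthetic image are assumptions for illustration, and taking the absolute value at the end is simply a display choice so that edges show up as bright values.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Synthetic image: left half black (0), right half white (1).
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Center pixel weighted by 8, each of its 8 neighbors by -1:
# on a uniform region the weighted sum cancels out to 0.
kernel = np.array([
    [-1, -1, -1],
    [-1,  8, -1],
    [-1, -1, -1],
], dtype=float)

windows = sliding_window_view(image, (3, 3))        # shape (3, 4, 3, 3)
response = (windows * kernel).sum(axis=(-2, -1))    # multiply, then sum
edges = np.abs(response)                            # keep only edge strength

print(edges[0])  # [0. 3. 3. 0.]
```

The output is 0 wherever the window covers a uniform area and nonzero only where the window straddles the black-to-white boundary.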

What is a convolutional neural network?

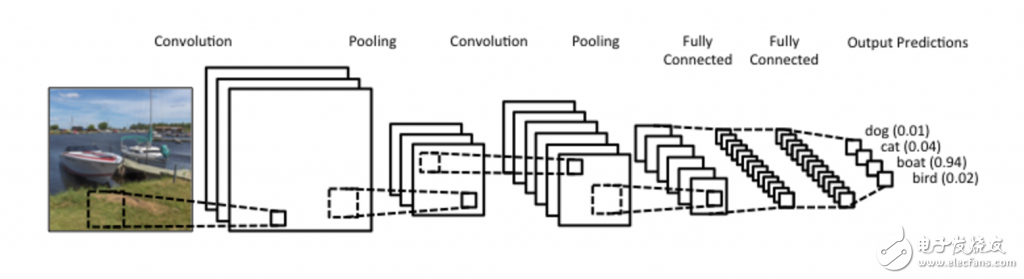

Now that you know what convolutions are, what is a convolutional neural network? Simply put, a CNN is several layers of convolutions with nonlinear activation functions (e.g., ReLU or tanh) applied to the results.

In a traditional feedforward neural network, we connect each input neuron to each output neuron in the next layer; this is called a fully connected layer or an affine layer. CNNs do not do that. Instead, we use convolutions over the input layer to compute the output (translator: convolution produces a local mapping; see the convolution figure earlier). This results in local connections, where each output value is connected only to a small region of the input. In other words, each neuron is connected only to the nearby neurons in the previous layer.

Each layer applies different filters, typically hundreds or thousands like the ones shown above, and combines their results. There are also pooling (subsampling) layers, which we will get to later.

During the training phase, a CNN automatically learns the values of its filters from the training set. For example, in image classification a CNN may learn to detect edges from raw pixels in the first layer, use the edges to detect simple shapes in the second layer, and then use these shapes to detect higher-level features, such as face shapes or houses, in higher layers (translator: features are extracted layer by layer). The last layer is a classifier that uses these high-level features.

Two aspects of this computation are worth noting: location invariance and compositionality.

Suppose you want to classify whether or not there is an elephant in an image that also contains, say, trees (a binary classification: elephant or no elephant).

Location invariance: because the filters slide over the entire image, you don't need to care much about where the elephant is. In practice, pooling also gives you invariance to translation, rotation, and scaling (more on that later).

Compositionality (local composition): each filter composes a local patch of lower-level features into a higher-level representation.

That is why CNNs are so powerful in computer vision. It makes intuitive sense that you build edges from pixels, shapes from edges, and more complex objects from shapes.

CNN use in NLP

Instead of image pixels, the input to most NLP tasks is a sentence or document represented as a matrix. Each row of the matrix corresponds to one token, typically a word, but it could also be a character. That is, each row is a vector that represents a word. Typically these vectors are word embeddings (low-dimensional representations) such as word2vec or GloVe, but they could also be one-hot vectors that index the word into a vocabulary. For a 10-word sentence using a 100-dimensional embedding, we have a 10 × 100 matrix as our input. That is our "image".

In vision, our filters slide over local patches of an image, but in NLP we typically use filters that slide over full rows of the matrix (that is, whole words). Thus the width of a filter is usually the same as the width of the input matrix. The height, or region size, may vary, but sliding windows over 2-5 words at a time is typical.
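To make the 10 × 100 "image" concrete, here is a minimal sketch that builds the sentence matrix by looking up one embedding row per word. The example sentence and the randomly initialized embedding table are placeholders; in practice the rows would come from pretrained vectors such as word2vec or GloVe.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 10-word sentence.
sentence = "the quick brown fox jumps over the lazy sleeping dog".split()

# Toy vocabulary built from the sentence itself.
vocab = sorted(set(sentence))
word_to_id = {word: i for i, word in enumerate(vocab)}

# Random stand-in for a pretrained embedding table: one 100-d row per word.
embed_dim = 100
embeddings = rng.normal(size=(len(vocab), embed_dim))

# Look up one row per word: this is the input "image".
ids = [word_to_id[word] for word in sentence]
sentence_matrix = embeddings[ids]
print(sentence_matrix.shape)  # (10, 100)

# The one-hot alternative: each row has a single 1 at the word's index.
one_hot_matrix = np.eye(len(vocab))[ids]
print(one_hot_matrix.shape)   # (10, 9), since "the" occurs twice
```

Note that the two occurrences of "the" map to identical rows; the matrix records word order through row position, not through the vectors themselves.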

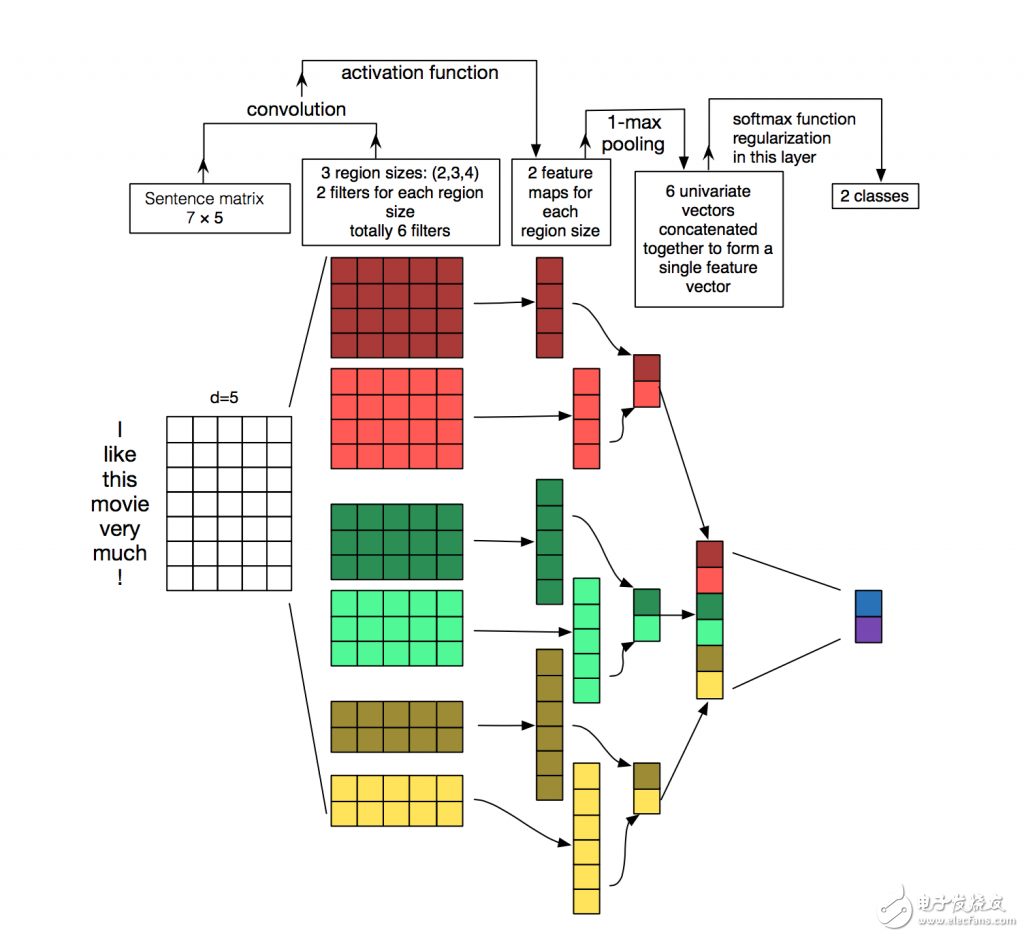

Putting all of the above together, a CNN for NLP looks like this (take some time to understand the figure and think about how each dimension is computed; you can ignore the pooling for now, I will explain it later):

A convolutional neural network structure diagram for sentence classification.

Here we have three filter region sizes, 2, 3, and 4, each of which has 2 filters. Every filter performs a convolution over the sentence matrix and generates a feature map whose length depends on the region size. Then 1-max pooling is applied to each map, i.e., the largest value of each feature map is recorded. Thus a single feature is generated from each of the six maps, and these six features are concatenated to form a feature vector for the penultimate layer. The final softmax layer receives this feature vector as input and uses it to classify the sentence; here we assume binary classification, hence two possible output states.

Source: Zhang, Y., & Wallace, B. (2015). A Sensitivity Analysis of (and Practitioners' Guide to) Convolutional Neural Networks for Sentence Classification.
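The forward pass described above can be sketched in plain NumPy to check how the dimensions work out. This is a rough illustration, not the authors' implementation: the sentence length (7), the embedding size (5), the random weights, and the use of ReLU are all assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions): 7 words, 5-dimensional embeddings.
n_words, embed_dim = 7, 5
sentence_matrix = rng.normal(size=(n_words, embed_dim))

region_sizes = [2, 3, 4]   # filter heights, in words
filters_per_size = 2
n_classes = 2

feature_maps = []
for height in region_sizes:
    for _ in range(filters_per_size):
        # Each filter spans the full embedding width.
        weights = rng.normal(size=(height, embed_dim))
        # Slide down the sentence one word at a time.
        fmap = np.array([
            np.sum(sentence_matrix[i:i + height] * weights)
            for i in range(n_words - height + 1)
        ])
        feature_maps.append(np.maximum(fmap, 0.0))  # ReLU nonlinearity

print([len(fmap) for fmap in feature_maps])  # [6, 6, 5, 5, 4, 4]

# 1-max pooling: keep only the largest value of each feature map.
pooled = np.array([fmap.max() for fmap in feature_maps])  # shape (6,)

# Softmax layer over the concatenated 6-d feature vector.
output_weights = rng.normal(size=(pooled.size, n_classes))
logits = pooled @ output_weights
probs = np.exp(logits - logits.max())
probs /= probs.sum()
print(probs.shape)  # (2,)
```

A filter of height h over n words yields a feature map of length n - h + 1, which is why the maps differ in length. After 1-max pooling every map contributes exactly one number, so the feature vector has a fixed size regardless of sentence length.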

So can we get the same kind of intuition as in computer vision? Location invariance and local compositionality made intuitive sense for images, but they are not particularly intuitive for NLP. You probably do care a lot about where in a sentence a word appears. (There is a tension here that I will come back to later: there, we do not care where something appears, only whether it appears.)

Pixels that are close together are very likely to be semantically related, for example as parts of the same object, but the same is not always true for words. In many languages, the parts of a phrase can be separated by several other words.

The compositional aspect is not obvious either. Clearly words compose in some ways, like an adjective modifying a noun, but how exactly these rules work and what higher-level representations actually mean is not as obvious or intuitive as in computer vision.

Given all this, CNNs would not seem to be a particularly good fit for NLP problems. Recurrent neural networks (RNNs) make more intuitive sense: they resemble how we process language (or at least how we think we process language), reading sequentially from left to right. Fortunately, this does not mean that CNNs are useless. All models are wrong, but some are useful. It turns out that in practice CNNs perform quite well on NLP problems. The simple bag-of-words model is an obvious oversimplification based on incorrect assumptions, yet it has been a standard approach for many years and has achieved good results.

A big argument for CNNs is that they are fast. Very fast. Convolutions are a core part of computer graphics and are implemented at the hardware level on GPUs. Compared to something like n-grams, CNNs are also efficient in terms of representation. With a large vocabulary, computing anything beyond 3-grams quickly becomes expensive. Even Google doesn't provide anything beyond 5-grams. Convolutional filters, by contrast, learn good representations automatically, without needing to represent the whole vocabulary, so it is entirely reasonable to have filters of size larger than 5. I like to think that many of the learned filters in the first layer capture features quite similar (but not limited) to n-grams, while representing them in a more compact and efficient way.
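A back-of-the-envelope calculation illustrates the representation argument. The vocabulary size and embedding dimension below are hypothetical, and the n-gram figure is an upper bound on the number of possible n-grams, not the number that actually occur in a corpus; the point is only that one quantity grows exponentially with n while the other grows linearly.

```python
def possible_ngrams(vocab_size, n):
    """Upper bound on the number of distinct n-grams over a vocabulary."""
    return vocab_size ** n

def filter_parameters(region_size, embed_dim):
    """Weights of one convolutional filter spanning `region_size` words,
    plus one bias term."""
    return region_size * embed_dim + 1

vocab_size = 10_000   # hypothetical vocabulary size
embed_dim = 100       # hypothetical embedding dimension

for n in (1, 2, 3, 5, 7):
    print(n, possible_ngrams(vocab_size, n), filter_parameters(n, embed_dim))
```

With these numbers, a table of possible 3-grams has up to 10^12 entries, while a single filter covering 7 words needs only 701 parameters on top of the shared embedding table.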

CNN hyperparameters

Before explaining how CNNs are applied to NLP problems, let's look at the choices you need to make when building a CNN. I hope this will help you better understand the literature in this field.
