Machine learning is used across a wide range of industries, where it has greatly improved the efficiency of business processes and raised productivity. This article introduces eight neural network architectures (neural networks being among the most advanced algorithms in machine learning) and explains each in terms of its principle and scope of application. Before looking at the architectures, let's explore two questions: why do we need machine learning, and why use neural networks? Then we will learn more about the eight architectures.

First, why do we need machine learning?

Generally speaking, machine learning comes into play for tasks that are too complicated for humans to program directly. Some problems are so complex that it is unrealistic or impossible for people to work out an explicit solution. Instead, we provide a machine learning algorithm with a large amount of data and let the algorithm explore the data and search for a model that describes it well enough to solve the problem.

Let's look at two small examples:

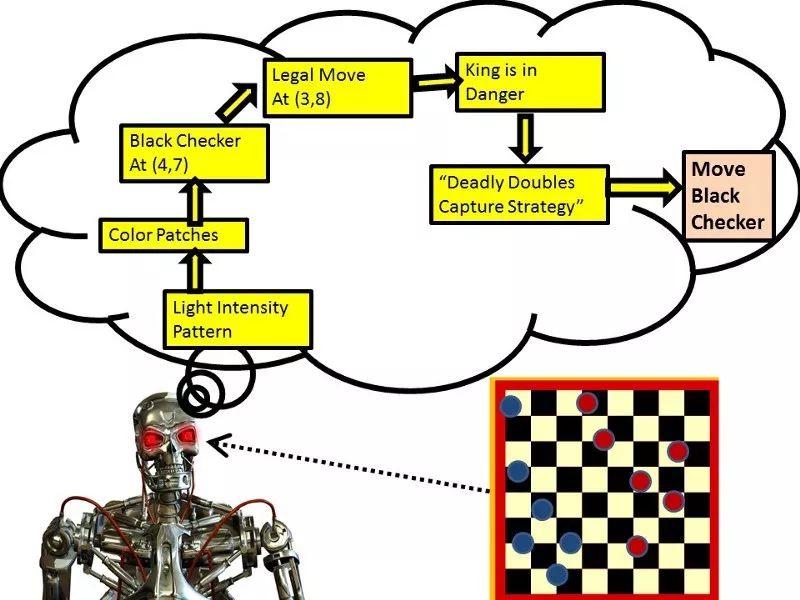

It is extremely difficult to write a program that recognizes an object under new lighting conditions, from a new viewpoint, and in a cluttered scene. We don't know what program to write or how to model the task, because we don't know how our own brain does it. Even if we had a good idea of how to do it, the program would be horrendously complicated.

It is also very difficult to write a program that detects credit card fraud. There are almost no rules that are both simple and reliable, so we need to combine a large number of weak rules. Fraud is also a moving target, so the program has to keep adapting.

There are plenty of tasks like these that we cannot program by hand, which is where machine learning becomes an effective method. Instead of writing a program for each specific task, we collect many examples that specify the correct output for a given input. A machine learning algorithm then takes these examples and produces a program that does the job. Unlike traditional hand-written code, this program may consist of thousands of parameters. If the modeling is done well, the program performs as well on new data as it does on the training data, and when the data changes the program can be adapted by training on new data. It should be noted that large-scale computation is now cheaper than paying experienced programmers to write task-specific programs, which is what makes machine learning economical.

At present, machine learning is mainly applied in the following areas:

Pattern recognition: objects in real scenes, faces and facial expressions, spoken words, etc.;

Anomaly detection: for example, unusual sequences of credit card transactions, unusual patterns of sensor readings, and abnormal behavior;

Prediction: future stock prices or currency exchange rates, or which movies, music, etc. a consumer will like.

Second, what is a neural network?

Neural network is a general term for a class of machine learning algorithms and models, and it is also the fastest-growing area of machine learning. Inspired by the structure of the biological brain, neural networks, and deep neural networks in particular, can handle extremely complex mappings between input and output. Three things make neural networks an important part of machine learning:

They help us understand how the brain actually works;

They help us understand a style of parallel computation, inspired by neurons and their adaptive connections, that is quite different from sequential computation;

They provide new, brain-inspired learning algorithms for solving practical problems.

For a systematic introduction to machine learning, Andrew Ng's (Wu Enda's) course is a very helpful place to start. After that, the neural networks course taught by Geoffrey Hinton, often called the "godfather of deep learning", provides a deeper understanding of neural networks.

In the following sections, we describe eight common neural network architectures that every machine learning practitioner and researcher should be familiar with.

Neural network architectures fall into three main categories: feedforward networks, recurrent networks, and symmetrically connected networks.

The feedforward network is the most common architecture. The first layer is the input, the last layer is the output, and the layers in between are called hidden layers. If there is more than one hidden layer, the network is called "deep". Each layer can be thought of as a transformation of its input followed by a nonlinear activation function.
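The layer-by-layer description above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 2-3-1 layer sizes, the hand-picked weights, and the choice of `tanh` as the activation are hypothetical and do not come from the article.

```python
import math

def dense(inputs, weights, biases):
    """Affine transformation: one output value per row of `weights`."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    """Pass `inputs` through each (weights, biases) pair, applying tanh after every layer."""
    activation = inputs
    for weights, biases in layers:
        activation = [math.tanh(z) for z in dense(activation, weights, biases)]
    return activation

# Hypothetical network: 2 inputs -> 3 hidden units -> 1 output, weights chosen by hand
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]
output = forward([1.0, 2.0], layers)
```

Each `(weights, biases)` pair is one layer; adding more pairs to `layers` makes the network deeper without changing `forward`.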

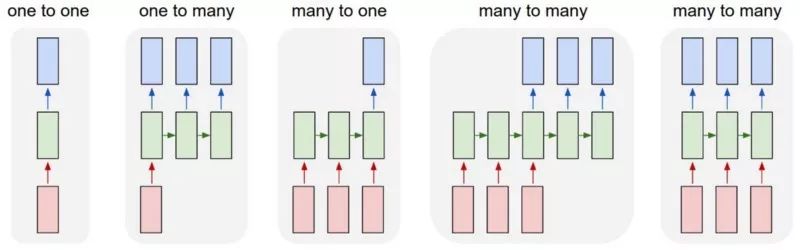

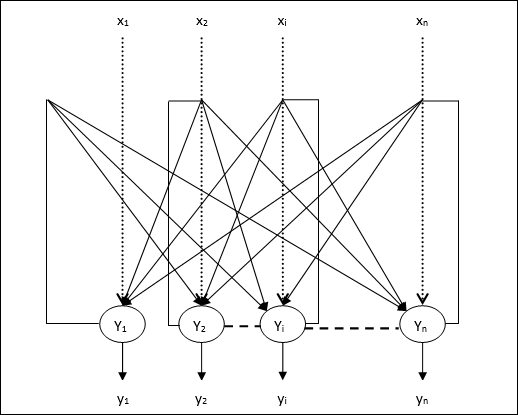

Recurrent neural networks (RNNs) have directed cycles in their connection graph. Their dynamics can be very complicated, which makes them difficult to train, but they are more biologically realistic. A lot of work is going into finding more efficient ways to train them. Such networks are a natural fit for sequence data: each unit is similar to a neuron in a deep network, but the same weights are used at every time step and the network receives input at every time step. They can also remember information in their hidden state.

Symmetrically connected networks are similar to recurrent networks, but the connections between units are symmetric (the weight is the same in both directions). They are easier to analyze than recurrent networks because they obey an energy function. A symmetrically connected network without hidden units is called a Hopfield network, and one with hidden units is called a Boltzmann machine (see Hinton's course for details).

Third, eight core neural network architectures

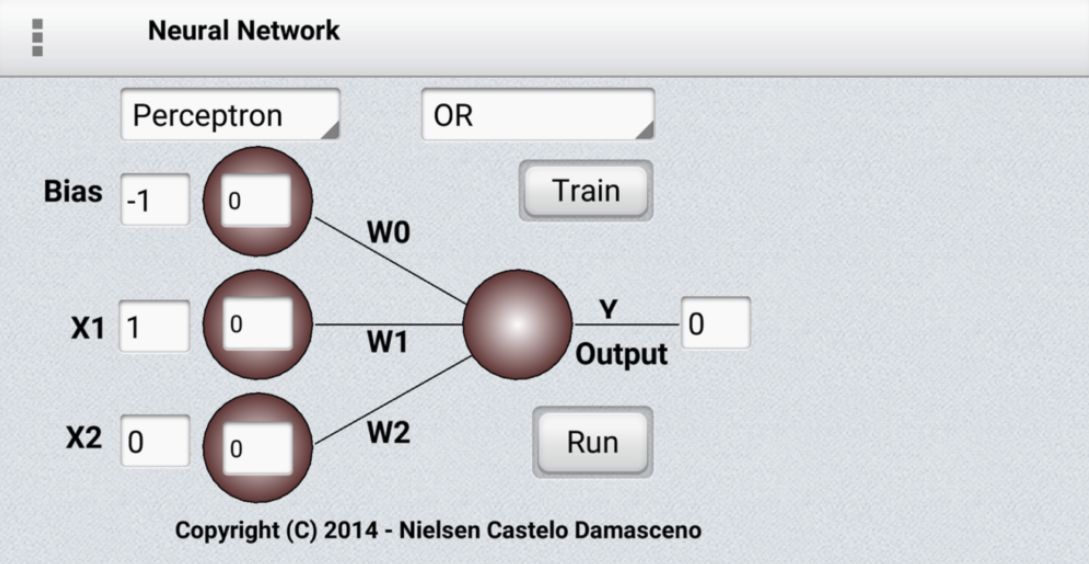

1. Perceptrons

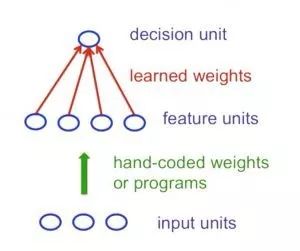

The perceptron can be considered the first generation of neural networks. Its inputs are a set of feature units (defined by hand or found by program search), which are connected through learned weights to a decision unit that produces the output. A typical perceptron is a feedforward model: the weighted inputs feed directly into the output unit.
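As a rough illustration of a decision unit with learned weights, the sketch below trains a perceptron with the classic error-driven update rule. The AND data set, the learning rate, the threshold at zero, and the function names are assumptions made for this example, not details from the article.

```python
def predict(weights, bias, features):
    """Binary threshold decision unit."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total >= 0 else 0

def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Perceptron rule: change the weights only when a sample is misclassified."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, target in samples:
            error = target - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:   # every sample is classified correctly
            break
    return weights, bias

# Logical AND is linearly separable, so the rule converges on it
and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, features) for features, _ in and_samples]
```

The same routine cannot fit XOR, no matter how long it runs, because no single threshold unit can separate that data; this is the kind of limitation the following paragraphs discuss.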

If enough good features are selected by hand, a perceptron can do almost anything. But once the hand-coded features are fixed, they strongly limit what the perceptron can learn, and choosing good features is itself a hard problem. This is devastating for the perceptron, because the whole point of pattern recognition is to recognize patterns despite transformations such as translation. Minsky and Papert's "group invariance theorem" says that the part of a perceptron that learns cannot learn to do this if the transformations form a group. To handle such transformations, a perceptron needs multiple feature units to recognize the transformed versions of a pattern. So the hardest part of pattern recognition has to be solved by hand-coded feature detectors, not by learning.

Networks without hidden units are very limited in the input-output mappings they can model. Adding more layers of linear units does not help, because a composition of linear functions is still linear. Fixed output nonlinearities are not enough either. We therefore need multiple layers of adaptive, nonlinear hidden units. But how do we train such a network? We need an efficient way to adapt all the weights, not just those of the last layer. This is hard, because learning the weights going into hidden units is equivalent to learning features, and nobody tells us directly what each hidden unit should do. This requires a more advanced structure!
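The point about stacked linear units can be checked numerically. In the sketch below (the matrices `W1` and `W2` are arbitrary, hypothetical values), two linear layers applied one after the other give the same result as a single layer whose weight matrix is their product.

```python
def matmul(a, b):
    """Matrix product of two lists-of-rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def apply_linear(matrix, vector):
    """Linear layer without bias or nonlinearity."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]   # 2 inputs -> 3 linear hidden units
W2 = [[2.0, 0.0, 1.0]]                        # 3 hidden units -> 1 output
combined = matmul(W2, W1)                     # equivalent single 2 -> 1 layer

x = [4.0, -2.0]
two_layer = apply_linear(W2, apply_linear(W1, x))
one_layer = apply_linear(combined, x)
```

Both `two_layer` and `one_layer` hold the same value, so the hidden layer adds no modeling power unless a nonlinearity is placed between the two transformations.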

2. Convolutional Neural Networks

The machine learning community has worked on object recognition and detection for many years. The problem has remained hard because the following difficulties have always plagued visual object recognition:

Segmentation, occlusion problem

Illumination change problem

Distortion and deformation problems

Shape variation among objects of the same kind, which are often defined by their function rather than their appearance

Difficulties caused by different perspectives

Problems caused by dimensional scale

These problems have long plagued traditional pattern recognition. People hand-crafted various features to describe the characteristics of objects, but the results were not always satisfactory. In object recognition especially, slight changes can make a huge difference in results.

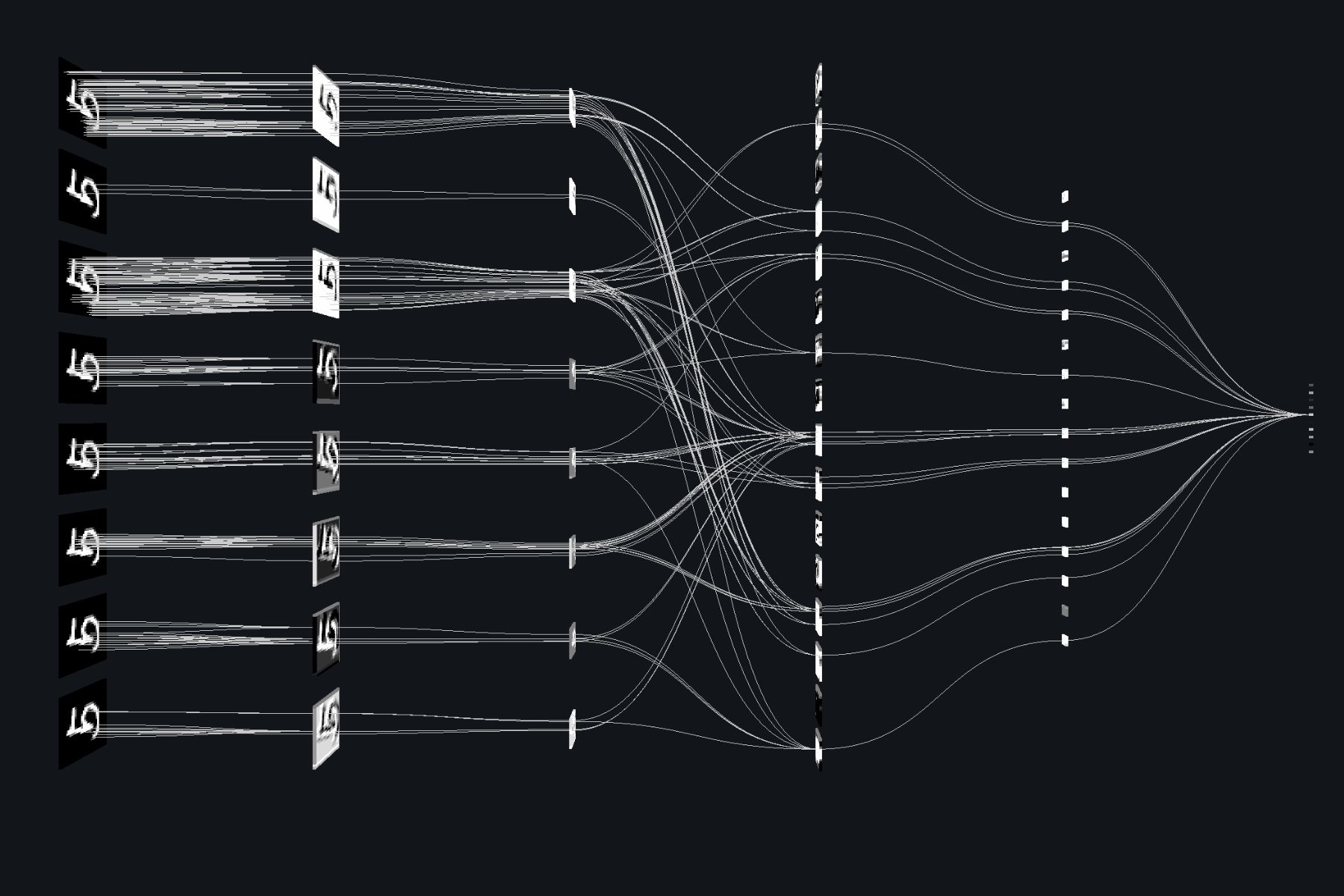

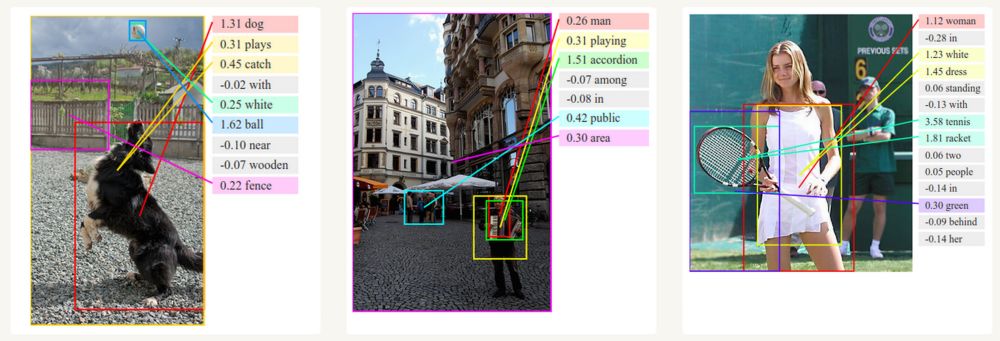

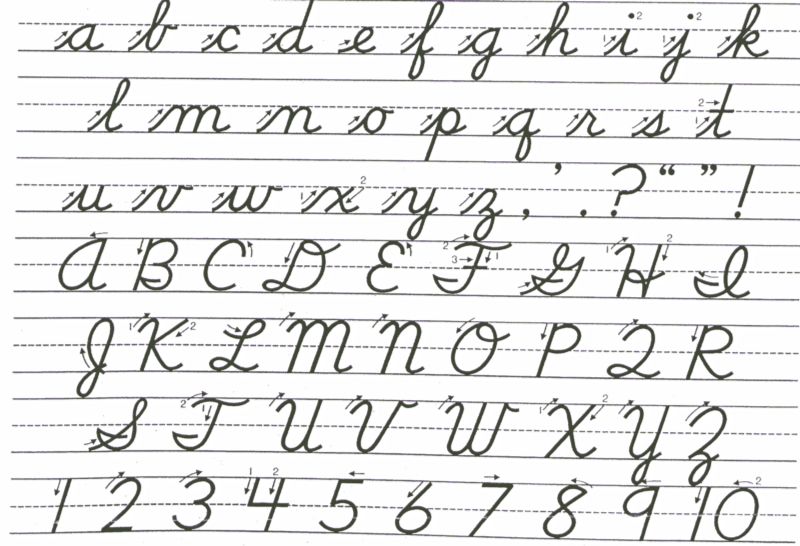

Visualization of graph convolutional networks

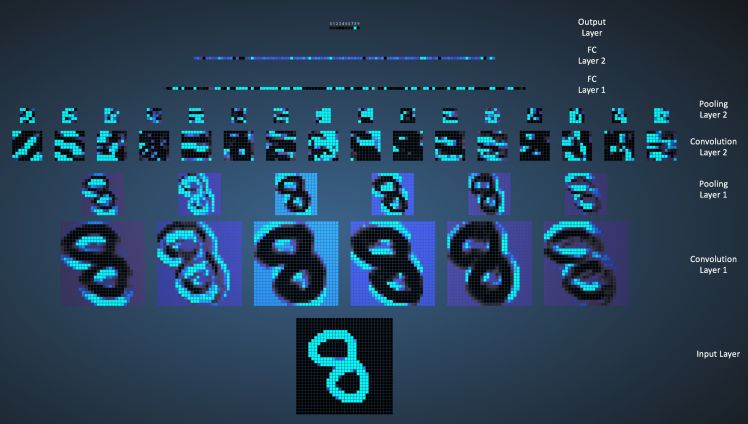

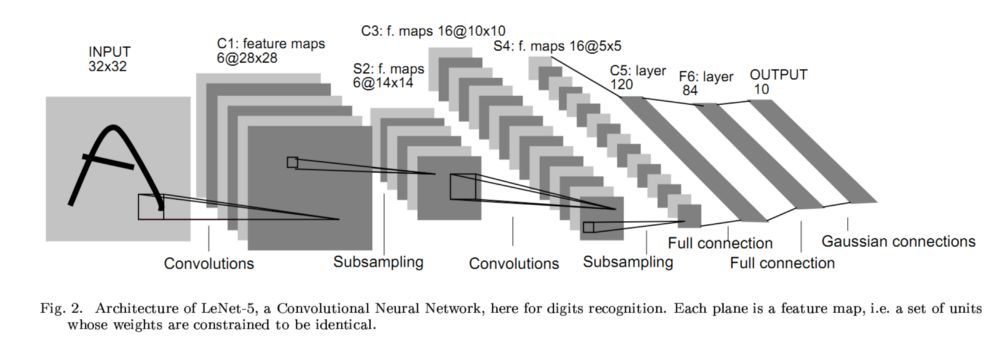

Building on the perceptron and the multi-layer perceptron, a new network structure was proposed: the convolutional neural network. Convolutional neural networks use replicated feature detectors that share weights, which gives them some invariance to scale, position, and orientation. A famous early example is LeNet, the network that Yann LeCun and colleagues applied with great success to handwritten character recognition in 1998. The figure below shows the main structure of LeNet: a six-layer network that includes convolution, pooling, and fully connected layers.

It trains the hidden-layer weights with the backpropagation algorithm, shares the weights of each convolution kernel across all positions within a convolutional layer, and introduces pooling layers to aggregate features, so that later layers have a larger receptive field. The final output is produced by the fully connected layers.
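To make weight sharing and pooling concrete, here is a minimal sketch of one convolution followed by max pooling. It is not LeNet: the 5x5 image, the 2x2 edge-detecting kernel, and the pooling size are hypothetical choices for illustration, and the "convolution" is implemented as the cross-correlation that most deep learning code uses.

```python
def conv2d(image, kernel):
    """Valid cross-correlation: the same kernel weights are reused at every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the strongest response in each window."""
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]

# Hypothetical image with a vertical edge between columns 1 and 2
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]        # responds to a dark-to-bright step

feature_map = conv2d(image, edge_kernel)  # 4x4 map, nonzero only at the edge
pooled = max_pool(feature_map)            # 2x2 summary of the feature map
```

The kernel has only four weights regardless of image size, and each pooled value summarizes a larger patch of the input than a single feature-map value does, which is the growth in receptive field described above.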

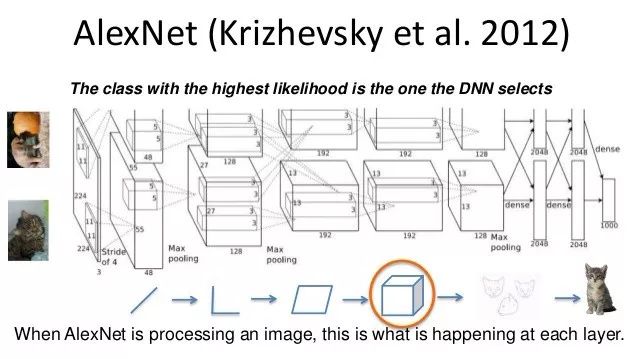

Then came the ILSVRC 2012 competition, for which ImageNet provided about 1.2 million high-resolution training images. The task was to train a model that assigns each image a probability of belonging to each of a thousand categories and uses these probabilities to classify the image. Hinton's student Alex Krizhevsky won the competition. Building on LeNet, he improved the network and trained a deep network with 7 hidden layers. GPUs were used for parallel training, which greatly improved the training efficiency of deep learning models, and GPUs have been on the radar of machine learning researchers ever since. The wide margin over second place demonstrated the power of deep learning and put the field on a fast track of rapid development.

3. Recurrent Neural Network

Recurrent neural networks are mainly used to process sequence data. In machine learning, a sequence model typically takes sequence data as input and is trained to predict the next item in the sequence. Before recurrent neural networks, such tasks were mainly handled with memoryless models.

The recurrent neural network is a very powerful tool with two important properties. First, its distributed hidden state allows it to store a lot of information about the past efficiently; second, its nonlinear dynamics allow it to update the hidden state in complicated ways. With enough neurons and time, an RNN can compute anything that a computer can compute. RNNs can also oscillate, settle to point attractors, and behave chaotically.
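The two properties above (a hidden state that carries past information and a nonlinear update) can be sketched with a one-unit RNN. The scalar state, the `tanh` nonlinearity, and the parameter values are assumptions chosen to keep the example small.

```python
import math

def rnn_forward(inputs, w_in, w_rec, bias, h0=0.0):
    """Scalar RNN: the same three parameters are reused at every time step."""
    hidden = h0
    states = []
    for x in inputs:
        # Nonlinear update mixes the new input with the previous hidden state
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states

# Hypothetical parameters; only the first time step carries a nonzero input
states = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)
```

Even though the inputs at the second and third steps are zero, the corresponding hidden states are not, because the recurrent connection keeps a fading trace of the first input.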

However, training recurrent neural networks is difficult because of vanishing and exploding gradients. An RNN trained on a long sequence behaves like a very deep network unrolled through time, so the gradients can easily shrink or blow up as they are propagated back through many time steps. Even with good initial weights, it is very hard to detect that the current target output depends on an input from many time steps ago, so RNNs have difficulty dealing with long-range dependencies.
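A small calculation shows why gradients vanish or explode over long sequences. The sketch assumes the simplest possible case, a linear scalar recurrence h_t = w * h_(t-1), so that the gradient of the last state with respect to the first is just w multiplied by itself once per time step; real RNNs have matrices and nonlinearities, but the repeated-multiplication effect is the same.

```python
def gradient_through_time(recurrent_weight, steps):
    """d h_T / d h_0 for the linear scalar recurrence h_t = recurrent_weight * h_(t-1)."""
    gradient = 1.0
    for _ in range(steps):
        gradient *= recurrent_weight   # chain rule: one factor per time step
    return gradient

vanishing = gradient_through_time(0.9, 100)   # about 2.7e-5
exploding = gradient_through_time(1.1, 100)   # about 1.4e4
```

A weight only slightly below or above 1 changes the gradient by many orders of magnitude after 100 steps, which is why inputs from far in the past have almost no usable training signal.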

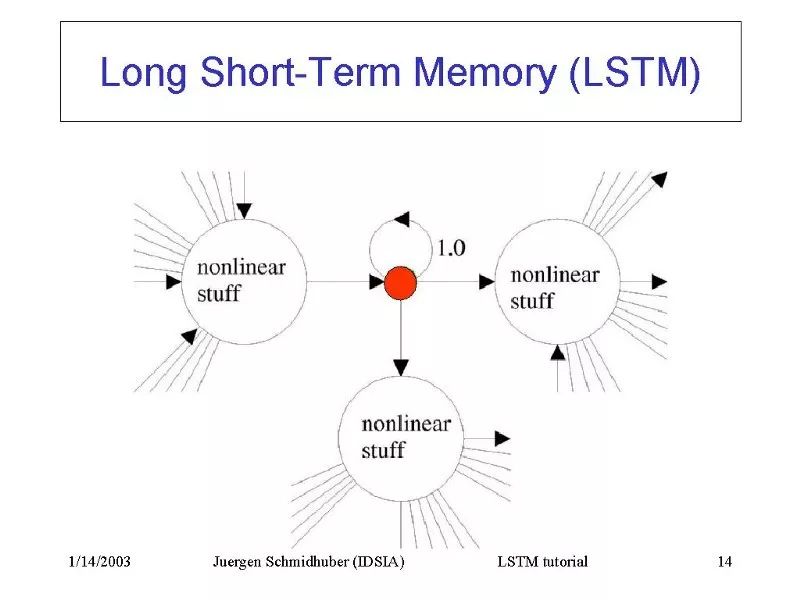

There are currently four effective ways to train a recurrent neural network: Long Short-Term Memory, Hessian-Free Optimization, Echo State Networks, and good initialization with momentum.

4. Long Short-Term Memory Network

Hochreiter and Schmidhuber (1997) solved the problem of getting an RNN to remember things for a long time (such as hundreds of time steps) by building what is known as Long Short-Term Memory (LSTM). They designed a memory cell using logistic and linear units with multiplicative interactions. Information enters the cell whenever its "write" gate is open. The information stays in the cell as long as its "hold" gate is on. Information is read from the cell by turning on its "read" gate.

RNNs with LSTM are especially suitable for tasks such as reading cursive handwriting. The input is usually a sequence of pen-tip coordinates (x, y) together with a parameter p that indicates whether the pen is up or down, and the output is a sequence of characters. Graves and Schmidhuber (2009) showed that RNNs with LSTM gave the best results at the time for cursive recognition. However, they used a sequence of small images as input rather than pen-tip coordinates.
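The write, hold, and read behavior of the memory cell can be sketched as follows. This is a deliberately simplified model: the gates here are set by hand to 0 or 1, whereas in a real LSTM they are values between 0 and 1 produced by learned logistic units, and the function name and the stored value are hypothetical.

```python
def memory_cell_step(stored, incoming, write_gate, hold_gate, read_gate):
    """One time step of a gated memory cell; each gate is a number in [0, 1]."""
    stored = hold_gate * stored + write_gate * incoming   # keep old content, admit new
    output = read_gate * stored                           # expose content only when read
    return stored, output

# Hand-set schedule: write 1.7 at step 0, hold it for two steps, read it at step 3
schedule = [
    # (incoming, write, hold, read)
    (1.7, 1.0, 0.0, 0.0),
    (9.9, 0.0, 1.0, 0.0),   # incoming value is ignored because the write gate is closed
    (9.9, 0.0, 1.0, 0.0),
    (9.9, 0.0, 1.0, 1.0),
]
stored = 0.0
outputs = []
for incoming, write, hold, read in schedule:
    stored, output = memory_cell_step(stored, incoming, write, hold, read)
    outputs.append(output)
```

Because the stored value is carried forward by multiplication with a gate equal to 1, it is not attenuated from step to step, which is what lets the cell bridge long time gaps.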

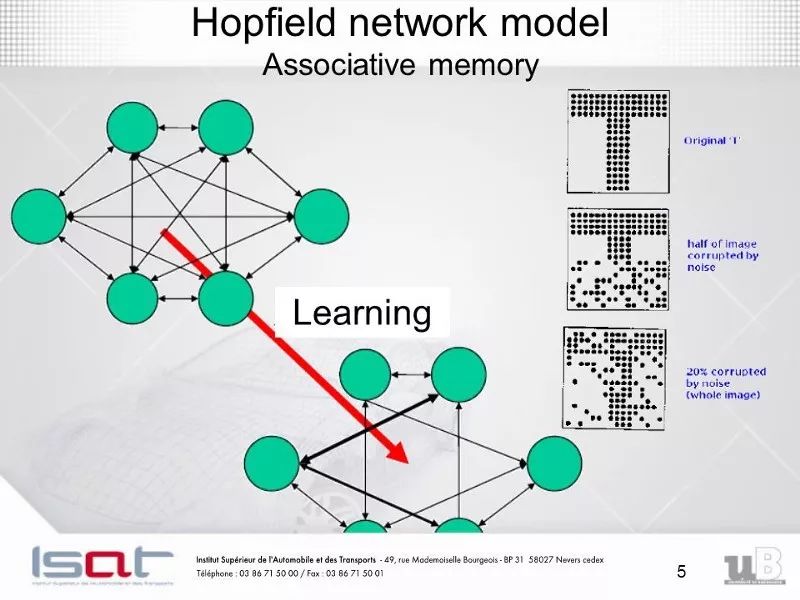

5. Hopfield Network

Recurrent networks of nonlinear units are generally hard to analyze. They can behave in many different ways: settle to a stable state, oscillate, or follow chaotic trajectories that cannot be predicted. A Hopfield network is composed of binary threshold units with recurrent connections between them. In 1982, John Hopfield realized that if the connections are symmetric, there is a global energy function. Each binary "configuration" of the whole network has an energy, and the binary threshold decision rule causes the network to settle toward a minimum of this energy function. A neat way to make use of this type of computation is to use memories as energy minima of the neural net. Using energy minima to represent memories gives a content-addressable memory (CAM): an item can be accessed by knowing just part of its content, and the memory is robust against hardware damage.

Each time we memorize a configuration, we hope to create a new energy minimum. But what if two nearby minima merge at an intermediate location? This limits the capacity of a Hopfield network. So how do we increase the capacity of a Hopfield network? Physicists love the idea that the mathematics they already know might explain how the brain works, and many papers on Hopfield networks and their storage capacity were published in physics journals. Eventually, Elizabeth Gardner figured out a better storage rule that uses the full capacity of the weights. Instead of trying to store all the vectors in one shot, she cycled through the training set many times and used the perceptron convergence procedure to train each unit to have the correct state given the states of all the other units in that vector. Statisticians call this technique "pseudo-likelihood".

The Hopfield network has another computational role. Instead of using the network to store memories, we can use it to construct interpretations of sensory input: the input is represented by the visible units, the interpretation is represented by the states of the hidden units, and the energy expresses the quality of the interpretation (lower energy means a better interpretation).
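The idea of memories as energy minima can be tried out on a tiny network. The sketch below makes several assumptions that are not in the article: unit states are coded as +1/-1 instead of 0/1, there are no biases, and the pattern is stored with the simple Hebbian outer-product rule.

```python
def store(patterns):
    """Hebbian storage: symmetric weights with a zero diagonal (states are +1 or -1)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights

def energy(weights, state):
    """Global energy E = -1/2 * sum_ij w_ij * s_i * s_j."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))

def recall(weights, state, sweeps=5):
    """Update one unit at a time with the binary threshold rule."""
    state = list(state)
    for _ in range(sweeps):
        for i in range(len(state)):
            field = sum(weights[i][j] * state[j] for j in range(len(state)))
            state[i] = 1 if field >= 0 else -1
    return state

memory = [1, -1, 1, -1, 1, -1]
weights = store([memory])
corrupted = [-1, -1, 1, -1, 1, -1]      # first unit flipped
recovered = recall(weights, corrupted)
```

Starting from the corrupted vector, the threshold updates lower the energy until the network settles in the stored pattern, which is the content-addressable behavior described above: part of the content is enough to retrieve the whole item.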

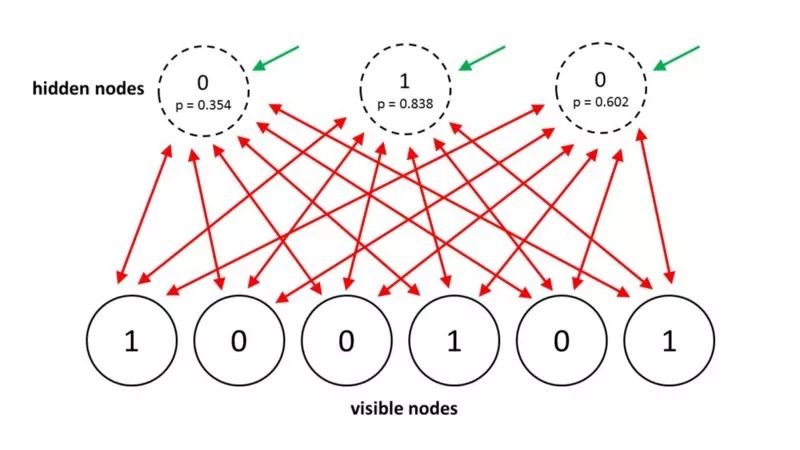

6. Boltzmann Machine Network

The Boltzmann machine is a type of stochastic recurrent neural network. It can be seen as the stochastic, generative counterpart of the Hopfield network. It was one of the first neural networks capable of learning internal representations, and it can represent and solve difficult combinatorial problems.

The goal of the Boltzmann machine learning algorithm is to maximize the product of the probabilities that the Boltzmann machine assigns to the binary vectors in the training set. This is equivalent to maximizing the sum of the log probabilities that the Boltzmann machine assigns to the training vectors. It is also equivalent to maximizing the probability that we would obtain exactly the N training cases if we did the following: 1) let the network settle to its stationary distribution N different times with no external input; and 2) sample the visible vector once each time.
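For a network small enough to enumerate, the probabilities that the learning objective refers to can be computed exactly. The sketch below assumes the standard energy-based definition (probability proportional to exp(-E), with E built from biases and pairwise weights over 0/1 states); the three-unit network and its parameter values are hypothetical.

```python
import itertools
import math

def energy(state, weights, biases):
    """E(s) = -sum_i b_i*s_i - sum_{i<j} w_ij*s_i*s_j for binary states in {0, 1}."""
    total = -sum(b * s for b, s in zip(biases, state))
    for i in range(len(state)):
        for j in range(i + 1, len(state)):
            total -= weights[i][j] * state[i] * state[j]
    return total

def visible_probabilities(weights, biases, n_visible):
    """p(v): sum exp(-E) over all hidden configurations, then normalize."""
    unnormalized = {}
    for state in itertools.product([0, 1], repeat=len(biases)):
        visible = state[:n_visible]
        unnormalized[visible] = (unnormalized.get(visible, 0.0)
                                 + math.exp(-energy(state, weights, biases)))
    partition = sum(unnormalized.values())
    return {v: p / partition for v, p in unnormalized.items()}

def log_likelihood(training_vectors, probabilities):
    """Sum of log probabilities, i.e. the log of the product of probabilities."""
    return sum(math.log(probabilities[tuple(v)]) for v in training_vectors)

# Hypothetical machine: units 0 and 1 are visible, unit 2 is hidden
weights = [[0.0, 1.0, 2.0],
           [0.0, 0.0, -1.0],
           [0.0, 0.0, 0.0]]      # only entries with i < j are used
biases = [0.1, -0.2, 0.0]
probabilities = visible_probabilities(weights, biases, n_visible=2)
training = [(1, 1), (1, 0), (1, 1)]
objective = log_likelihood(training, probabilities)
```

Learning would adjust `weights` and `biases` to increase `objective`. Exact enumeration is only feasible for toy networks like this one, which is why practical procedures rely on sampling.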

In 2012, Salakhutdinov and Hinton proposed an efficient mini-batch learning procedure for Boltzmann machines. For the positive phase, first initialize the hidden probabilities to 0.5, then clamp a data vector on the visible units, and then update all the hidden units in parallel with mean-field updates until convergence. After the network has converged, record PiPj for every connected pair of units and average this over all data in the mini-batch. For the negative phase, first keep a set of "fantasy particles", where the value of each particle is a global configuration. Then update all the units in each fantasy particle a few times. For every connected pair of units, average SiSj over all the fantasy particles.

In a general Boltzmann machine, the stochastic updates of the units have to be sequential. There is a special architecture that allows more efficient alternating parallel updates (no connections within a layer and no skip-layer connections). With this architecture, the mini-batch procedure makes the updates of the Boltzmann machine more parallel. This is called a Deep Boltzmann Machine (DBM), a general Boltzmann machine with many missing connections.

A simpler relative is the Restricted Boltzmann Machine (RBM), which restricts connectivity to make inference and learning easier. There is only one layer of hidden units, and there are no connections between hidden units. In an RBM, it takes only one step to reach thermal equilibrium when the visible units are clamped. Another efficient mini-batch learning procedure for RBMs goes like this: for the positive phase, first clamp a data vector on the visible units. Then calculate all visible and hidden unit pairs

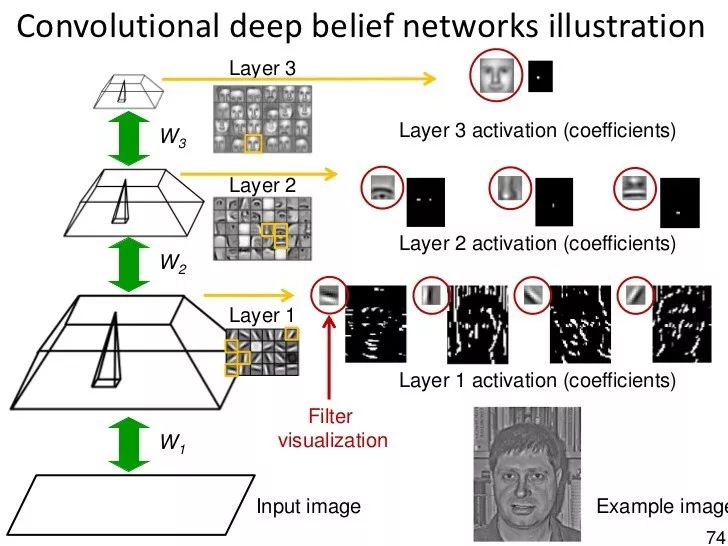
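Because a restricted Boltzmann machine has no connections between hidden units, all hidden units can be updated in one parallel step once the visible units are fixed. The sketch below uses the standard logistic form for the conditional probability of a hidden unit; the formula, the function names, and the example weights are assumptions for illustration rather than details given in the article.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probabilities(visible, weights, hidden_biases):
    """p(h_j = 1 | v) for every hidden unit; weights[i][j] links visible i to hidden j."""
    return [sigmoid(bias + sum(v * weights[i][j] for i, v in enumerate(visible)))
            for j, bias in enumerate(hidden_biases)]

# Hypothetical RBM with 2 visible and 2 hidden units
weights = [[2.0, -2.0],
           [5.0, 5.0]]
probabilities = hidden_probabilities([1, 0], weights, hidden_biases=[0.0, 0.0])
```

Each entry of `probabilities` depends only on the visible vector, not on the other hidden units, so no iterative settling is needed for the hidden layer.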

7. Deep Belief Network

Backpropagation is considered the standard method for training artificial neural networks: after a batch of data is processed, it calculates the error contribution of each neuron. However, there are some major problems with backpropagation. First, it requires labeled training data, while almost all real-world data is unlabeled. Second, it does not scale well: learning is very slow in networks with multiple hidden layers. Third, it can get stuck in poor local optima, which for deep networks can be far from optimal.
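To show what "calculating the error contribution of each neuron" means in practice, here is a minimal sketch of backpropagation on the smallest possible network: one input, one `tanh` hidden unit, and one linear output with a squared-error loss. The network shape, the loss, and the numbers are assumptions for the example; the finite-difference check is included only to confirm that the hand-derived gradients are right.

```python
import math

def forward(w1, w2, x):
    hidden = math.tanh(w1 * x)
    return hidden, w2 * hidden

def loss(w1, w2, x, target):
    _, output = forward(w1, w2, x)
    return 0.5 * (output - target) ** 2

def backprop(w1, w2, x, target):
    """Apply the chain rule from the output back to the first weight."""
    hidden, output = forward(w1, w2, x)
    delta_output = output - target                           # error at the output unit
    grad_w2 = delta_output * hidden
    delta_hidden = delta_output * w2 * (1.0 - hidden ** 2)   # error passed back through tanh
    grad_w1 = delta_hidden * x
    return grad_w1, grad_w2

def numeric_gradient(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used here only as a sanity check."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2

analytic = backprop(0.5, -1.5, 2.0, 1.0)
numeric = numeric_gradient(0.5, -1.5, 2.0, 1.0)
```

Note that the computation needs `target`, the desired output for this input, which is the dependence on labeled data that the first criticism above refers to.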

To overcome these limitations of backpropagation, researchers have considered unsupervised learning approaches. These keep the efficiency and simplicity of using a gradient method to adjust the weights, but use it to model the structure of the sensory input. In particular, the weights are adjusted to maximize the probability that a generative model would have generated the sensory input. The question is what kind of generative model we should learn. Can it be an energy-based model like the Boltzmann machine? A causal model made of idealized neurons? Or a hybrid of the two?

A belief net is a directed acyclic graph composed of random variables. Using a belief net, we get to observe some of the variables, and we would like to solve two problems: the inference problem, which is to infer the states of the unobserved variables, and the learning problem, which is to adjust the interactions between variables so that the network is more likely to generate the training data.

Early graphical models used experts to define the graph structure and the conditional probabilities. At the time, the graphs were sparsely connected, so researchers initially focused on doing correct inference, not on learning. For neural networks, learning is central, and hand-wiring the knowledge is not cool, because knowledge should come from learning the training data. Neural networks do not aim to be easy to interpret or to make inference simple. Even so, there are neural network versions of belief nets.

There are two types of generative neural networks composed of stochastic binary neurons. The first is energy-based: connecting binary stochastic neurons with symmetric connections gives a Boltzmann machine. The second is causal: connecting binary stochastic neurons in a directed acyclic graph gives a sigmoid belief network. These two types are not described in further detail here.

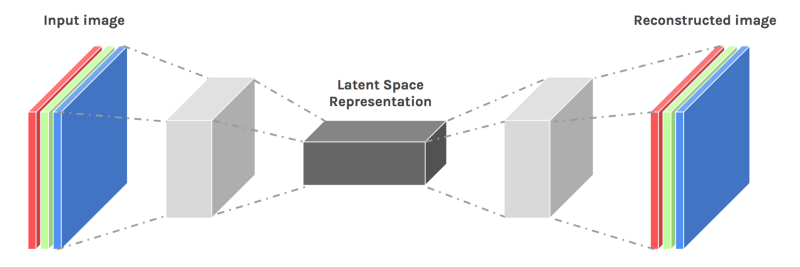

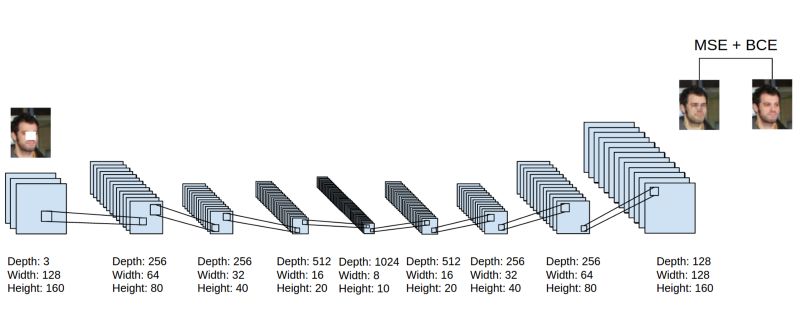

8. Deep Auto-encoders

Finally, let's talk about deep autoencoders. They have always looked like a very good way to do dimensionality reduction, for a few reasons: they provide flexible mappings in both directions, the learning time is linear (or better) in the number of training cases, and the final encoding model is fairly compact and fast. However, it turned out to be very difficult to optimize deep autoencoders with backpropagation. With small initial weights, the backpropagated gradient dies out. We now have better ways to optimize them: either use unsupervised layer-by-layer pre-training or initialize the weights carefully, as in echo state networks.
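The encode-then-decode idea behind autoencoders can be sketched with a much simpler model than the deep ones discussed here. The example below is a shallow, linear autoencoder with tied weights that compresses 2-D points to a single number and reconstructs them, trained by plain gradient descent. The data, the tied-weight choice, the learning rate, and the step count are all assumptions for illustration.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reconstruct(w, x):
    code = dot(w, x)                 # encode: 2 numbers -> 1 number
    return [code * wi for wi in w]   # decode with the same (tied) weights

def reconstruction_loss(w, data):
    return sum((xi - ri) ** 2
               for x in data
               for xi, ri in zip(x, reconstruct(w, x)))

def train(w, data, learning_rate=0.005, steps=500):
    """Gradient descent on the summed squared reconstruction error."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x in data:
            code = dot(w, x)
            residual = [xi - code * wi for xi, wi in zip(x, w)]
            residual_dot_w = dot(residual, w)
            for k in range(len(w)):
                grad[k] += -2.0 * (residual_dot_w * x[k] + code * residual[k])
        w = [wi - learning_rate * g for wi, g in zip(w, grad)]
    return w

# Hypothetical data lying on a line through the origin with direction (0.6, 0.8)
data = [[t * 0.6, t * 0.8] for t in (-2.0, -1.0, 0.0, 1.0, 2.0)]
initial = [0.6, 0.2]
trained = train(initial, data)
```

After training, `trained` points along the direction of the data, so a single code value is enough to reconstruct each point. Deep autoencoders stack several nonlinear layers on each side of the code, which gives more flexible mappings but is also where the optimization difficulties described above arise.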

There are three different types of shallow autoencoders that can be used for pre-training:

RBM

Denoising autoencoder

Contractive autoencoder

Simply put, there are now many different ways to do layer-by-layer pre-training of features. For data sets that do not have a large number of labeled cases, pre-training helps the subsequent discriminative learning. For very large, labeled data sets, initializing the weights used in supervised learning with unsupervised pre-training is not necessary, even for deep networks. Pre-training was the first good way to initialize the weights of deep networks, but there are now other ways. However, if we make the networks much larger, we will need pre-training again!

Final thoughts

Neural networks are one of the most beautiful programming paradigms of all time. In traditional programming, we tell the computer what to do, breaking big problems into many small, precisely defined tasks that the computer can easily perform. In a neural network, by contrast, we don't tell the computer how to solve our problem. Instead, it learns from observational data and figures out its own solution to the problem.

Today, deep neural networks and deep learning achieve outstanding results on many important problems in computer vision, speech recognition, and natural language processing. They are deployed at scale by companies such as Google, Microsoft, and Facebook.

I hope this article will help you learn the core concepts of neural networks!
